A big part of building distributed computer systems is delivering proof that they actually work. Besides a live demo with a shiny front end and a polished slide deck, raw numbers are ultimately necessary to show that promises of robust availability, high throughput and low latency are kept during real-world use. And sometimes you may need numbers for debugging as well.

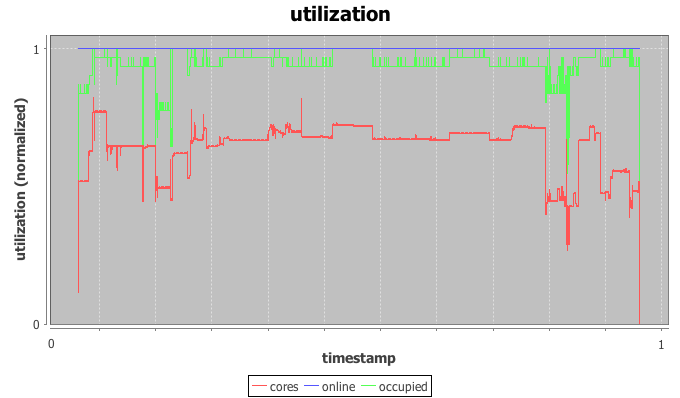

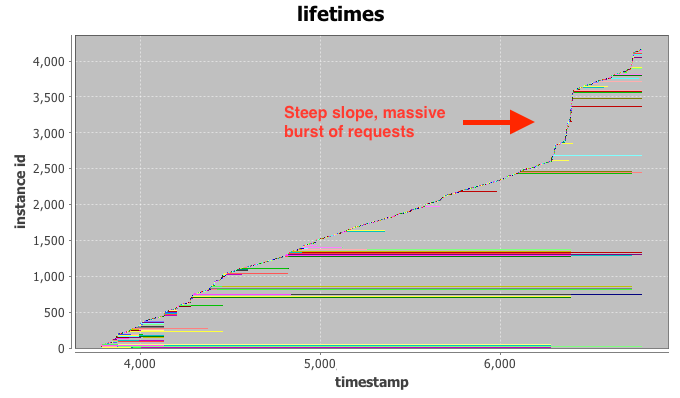

My clear personal favorite for data analysis and visualization (and light programming) is Python and, by extension, IPython Notebook, matplotlib, seaborn and the time-series analysis framework Pandas. Their integration has become seamless over the past few years, and they are well suited to pretty much any task, from quickly visualizing application logs to in-depth time-series analysis and statistical inference. As examples of successful use of these tools, I can offer our recent work on validated simulation of IaaS clouds and SLAs for spot instances. Looking closely at these publications, the astute reader will spot the telltale signs of graphs generated with these tools.

If you haven’t used IPython Notebook yet, I highly recommend you invest 1-2 hours in getting familiar with the basics. Personally, it took me some time to overcome my inertia and finally sit down with it, and I haven’t looked back since. It makes life quite a bit easier. I also had the opportunity earlier this year to talk to Brian Granger, one of the masterminds behind IPython, and heard about the plans for expanding IPython’s scope with project Jupyter. I’m excited to see what’s coming down the pipeline in terms of high-performance analytics for those lengthy production log files we have sitting around.

Pandas had a steep learning curve for me as well, and it took some time to get my head around some of the intermediate indexing and slicing techniques. Once I figured this out, however, productivity shot through the roof. Importing text, CSV, JSON and XML? No problem. Join three different data sets on different columns and get aggregate statistics à la SQL? Check. Plot intermediate results to debug heavy scipy use? Quick and easy. Things that took hours before get done in minutes now. It was well worth spending an afternoon to get familiar with it.
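To give a flavor of the "join three data sets and aggregate" workflow, here is a minimal sketch. The data sets, column names (`host_id`, `zone_id`, `latency_ms`) and values are all made up for illustration; in practice the inputs would be real files passed to `pd.read_csv` and `pd.read_json`.

```python
import io

import pandas as pd

# Hypothetical inputs; in-memory buffers stand in for real log files.
requests_csv = io.StringIO(
    "request_id,host_id,latency_ms\n"
    "1,a,12\n"
    "2,a,18\n"
    "3,b,40\n"
    "4,c,25\n"
)
hosts_json = io.StringIO(
    '[{"host": "a", "zone_id": 1},'
    ' {"host": "b", "zone_id": 1},'
    ' {"host": "c", "zone_id": 2}]'
)
zones = pd.DataFrame({"zone_id": [1, 2], "zone": ["east", "west"]})

requests = pd.read_csv(requests_csv)
hosts = pd.read_json(hosts_json)

# Join three data sets on differently named key columns.
merged = (
    requests
    .merge(hosts, left_on="host_id", right_on="host")
    .merge(zones, on="zone_id")
)

# SQL-style GROUP BY with several aggregates per group.
stats = merged.groupby("zone")["latency_ms"].agg(["count", "mean", "max"])
print(stats)
```

The two `merge` calls play the role of SQL `JOIN`s, and `groupby(...).agg(...)` corresponds roughly to `GROUP BY` with aggregate functions. Calling `stats.plot(kind="bar")` on the result would give a quick visual check, assuming matplotlib is installed.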

Despite all this greatness, there’s a caveat. For presentation slides I still find myself falling back on Microsoft Excel for most visualization. Yes, I know my coolness factor just took a hit. The WYSIWYG (“what-you-see-is-what-you-get”) formatting capabilities are still more time-efficient than figuring out the various corner cases of matplotlib calls. That being said, I usually prepare the data I plot in Excel using the aforementioned Python tools.
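One way to hand prepared data over to Excel is a plain CSV file, which Excel opens directly. The sketch below is only an illustration: the measurements and column names are hypothetical, and it writes to an in-memory buffer so that it runs without touching the disk.

```python
import io

import pandas as pd

# Hypothetical measurements in "long" format, one row per observation.
df = pd.DataFrame({
    "minute": [0, 0, 1, 1],
    "service": ["api", "db", "api", "db"],
    "latency_ms": [12.0, 30.0, 15.0, 28.0],
})

# Reshape to one column per service, which is convenient for Excel charts.
table = df.pivot(index="minute", columns="service", values="latency_ms")

# Write CSV to a buffer; with a real file this would be
# table.to_csv("latency_by_service.csv") (file name is just an example).
buffer = io.StringIO()
table.to_csv(buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

Pivoting first means each series ends up in its own column, so selecting the range and inserting a chart in Excel takes only a couple of clicks.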

Probably the easiest way to get it all set up is a pre-configured Python distribution such as Continuum Anaconda. An installation from scratch with pip and co. is possible as well, but depending on your platform you may end up resolving version conflicts manually. If you run into any roadblocks, there’s a solid user base for all these tools, which makes stackexchange.com an invaluable resource for troubleshooting in addition to the official IPython and Pandas docs.